Zombox

Year: 2019 Role: concept, development

Zombox is a game that lets you coach a team of AI box pushers. It’s also a tiny research project into machine learning and game design, which resulted in a paper we authored for IEEE MIPR 2020.

I created this game prototype with Jan Dornig during Global Game Jam ’19. We were both passionate about game design and AI, so we decided to use the opportunity to explore the two topics together.

Typically, when games and machine learning meet, it’s in the context of games being used to test ML algorithms, rather than ML being used to support the games. Even when ML does support games, it’s usually used for game production instead of being part of the gameplay.

Therefore, we wanted to see whether machine learning could be used as a game mechanic.

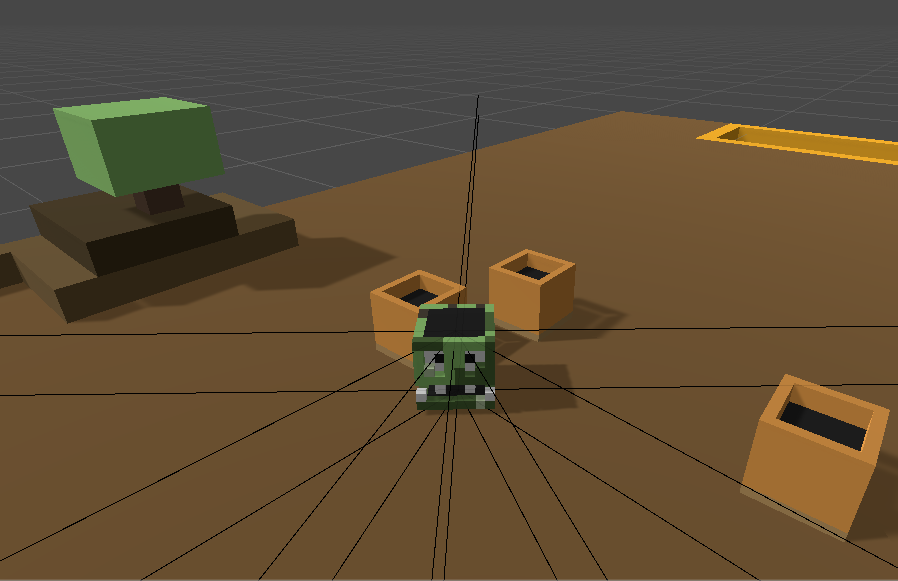

Gameplay

The game is played as a two-player local multiplayer sports game. The two teams share a battlefield scattered with boxes, which they fight to push into their respective goal areas.

Each player acts as a Zombox King, who must demonstrate to their minions how to play the game. Each king also has a button to wipe the zomboxes’ memories, in order to retrain them with a new strategy. Whoever gets to 20 goals first is the winner.

Development

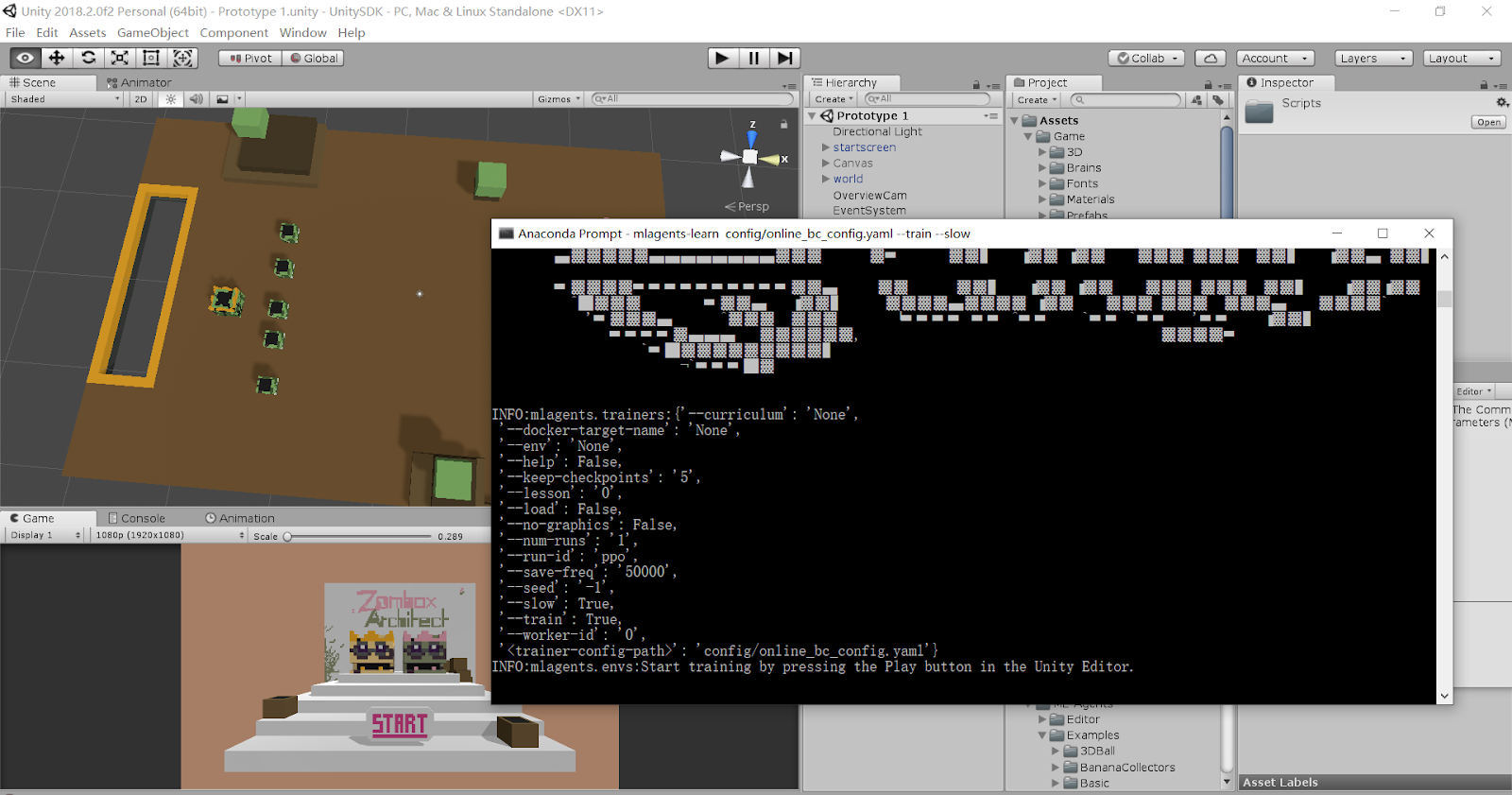

We made the minions mimic the player’s behavior by setting them up with Unity’s ML-Agents Toolkit. Both the player’s character and their minions are equipped with the same set of raycasters as “sensors”. The player records “clips” of their actions, which contain their movements and the input from the raycasters. Then, the minions learn via the Behavioral Cloning provided by the toolkit, using OpenAI’s Proximal Policy Optimization under the hood.
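To make the idea of learning from recorded clips more concrete, here is a minimal sketch of behavioral cloning in plain Python. It is not our Unity setup, nor the toolkit’s implementation: the three-ray sensor layout, the action names, the `ClonedPolicy` class and the demonstration data are all assumptions made up for illustration, and a simple softmax classifier stands in for the neural network. The point is only that a clip is a list of (sensor reading, action) pairs, and the minion fits a policy that predicts the king’s action from the same sensor reading.

```python
import math
import random

# Hypothetical discrete actions; the real game's action space may differ.
ACTIONS = ["turn_left", "forward", "turn_right"]


def softmax(logits):
    """Turn raw scores into probabilities (shifted by the max for stability)."""
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


class ClonedPolicy:
    """Linear softmax policy fitted to (observation, action) demonstrations."""

    def __init__(self, n_obs, n_actions):
        self.n_obs = n_obs
        self.n_actions = n_actions
        self.wipe()

    def wipe(self):
        """Forget everything learned so far (the 'wipe memories' button)."""
        self.weights = [[0.0] * self.n_obs for _ in range(self.n_actions)]
        self.biases = [0.0] * self.n_actions

    def action_probs(self, obs):
        logits = [
            sum(w * x for w, x in zip(row, obs)) + b
            for row, b in zip(self.weights, self.biases)
        ]
        return softmax(logits)

    def act(self, obs):
        """Pick the index of the most likely action for this sensor reading."""
        probs = self.action_probs(obs)
        return max(range(self.n_actions), key=probs.__getitem__)

    def fit(self, clip, epochs=200, lr=0.5, seed=0):
        """Stochastic gradient descent on the cross-entropy imitation loss."""
        rng = random.Random(seed)
        samples = list(clip)
        for _ in range(epochs):
            rng.shuffle(samples)
            for obs, action in samples:
                probs = self.action_probs(obs)
                for a in range(self.n_actions):
                    # Gradient of cross-entropy with respect to logit a.
                    grad = probs[a] - (1.0 if a == action else 0.0)
                    self.biases[a] -= lr * grad
                    for i, x in enumerate(obs):
                        self.weights[a][i] -= lr * grad * x


# A made-up "clip": each observation is (left, center, right) ray proximity
# to the nearest box, and each action is what the king did at that moment.
clip = [
    ([1.0, 0.0, 0.0], 0), ([0.8, 0.2, 0.0], 0),
    ([0.0, 1.0, 0.0], 1), ([0.1, 0.9, 0.1], 1),
    ([0.0, 0.0, 1.0], 2), ([0.0, 0.3, 0.7], 2),
]

policy = ClonedPolicy(n_obs=3, n_actions=len(ACTIONS))
policy.fit(clip)

# The minion now steers toward a box it has not seen at exactly this reading.
print(ACTIONS[policy.act([0.9, 0.1, 0.0])])
```

Calling `policy.wipe()` resets the weights, after which a new clip can be used to teach a different strategy. A toy like this only imitates moment-to-moment reactions, which is also roughly where our minions stayed.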

Since the ML-Agents Toolkit at the time was not designed with this training-as-game-mechanic usage in mind, our setup for the game was definitely a bit strange. You can read more about the development process in our blog post on Medium.

Result

Unfortunately, it seems that the training efficiency was too low and the behaviors too uncontrollable for this particular gameplay to work well. While the minions started going for the boxes soon after demonstrations, they could not learn to work as a team or develop effective strategies. Perhaps these can be learned with enough samples, but we could not see that happen before the players got frustrated.

Still, we are naively optimistic that machine learning applied this way opens up brand new forms of play. Imagine a long-running 4X strategy game where the player’s soldiers learn to fight like their king. Or a multiplayer game like Gladiabots, where the player plays the metagame of coming up with strategies one step ahead of the opponent.

Some ideas for the next iteration include pre-training the minions with reinforcement learning to improve their effectiveness, and allowing the king to selectively train individual minions so that they can take on different roles: defender, striker, perhaps goalie too. There have been strides in ML since then too, and we look forward to seeing whether newer, more efficient or more intuitive training methods would allow more interesting behaviors to emerge.
