Cyborgism

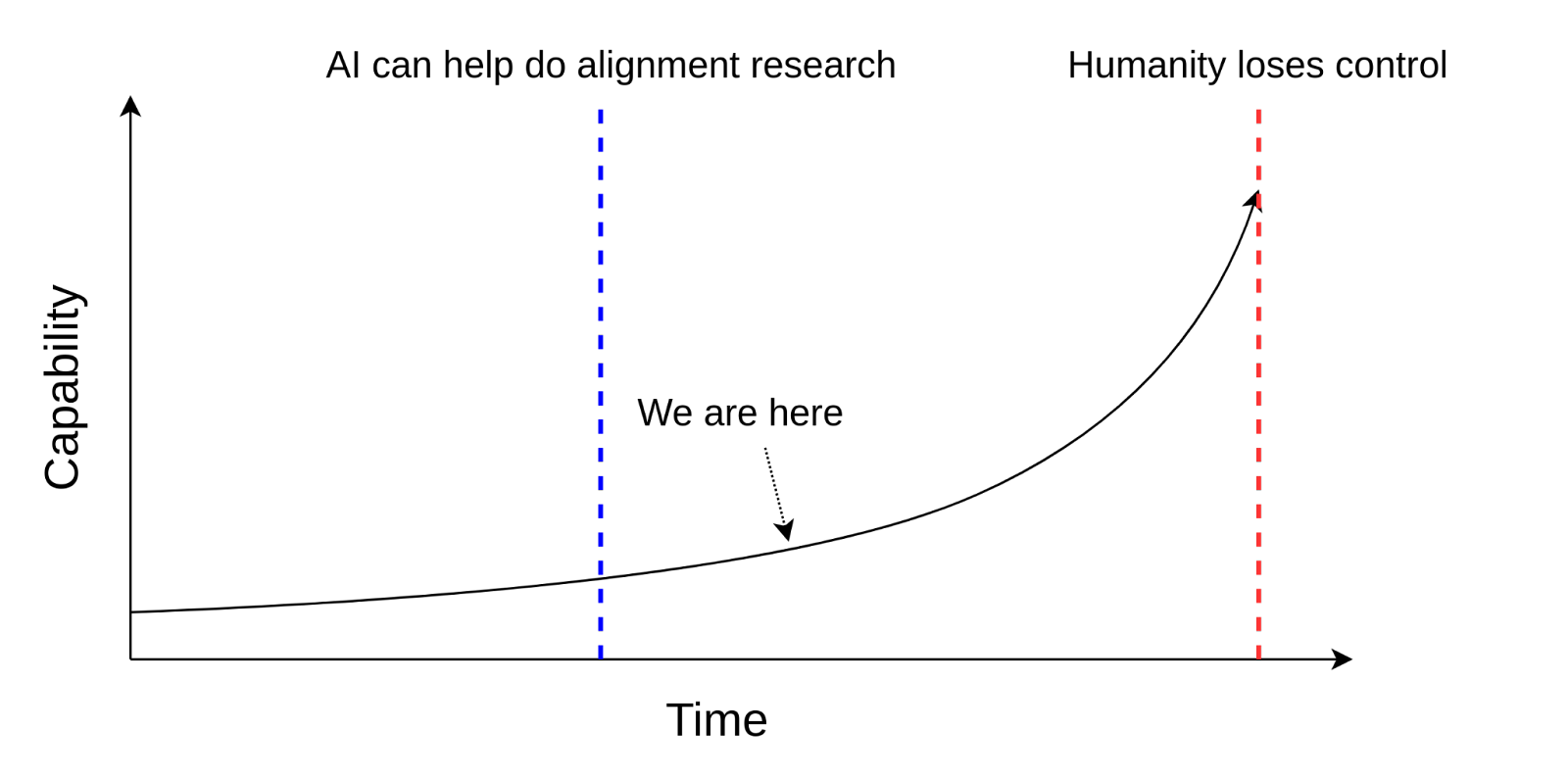

Core idea: human-in-the-loop AI system that accelerates human capability. Instead of automated alignment research, which will likely lead to ruin.

Readings & reading notes

LW sequences: Cyborgism by janus. Also good to read Simulators, which proposes another way to look at what systems like GPT really are.

From the post:

The intention of this post is not to propose an end-all cure for the tricky problem of accelerating alignment using GPT models. Instead, the purpose is to explicitly put another point on the map of possible strategies, and to add nuance to the overall discussion.

We currently have access to an alien intelligence, poorly suited to play the role of research assistant. Instead of trying to force it to be what it is not (which is both difficult and dangerous), we can cast ourselves as research assistants to a mad schizophrenic genius that needs to be kept on task, and whose valuable thinking needs to be extracted in novel and non-obvious ways.

What we are calling a “cyborg” is a human-in-the-loop process where the human operates GPT with the benefit of specialized tools, has deep intuitions about its behavior, and can make predictions about it on some level such that those tools extend human agency rather than replace it.

Loom

GPT generates tokens one at a time, probabilistically. Loom lets you see the generated results as a tree of probabilities instead of just one sentence built from the highest probability tokens.

My understanding: it

- lets you see more outputs from GPT at once

- better visualizes the “thought process” of GPT. Or what it’s actually doing. The article also points out that by seeing more outputs as they get generated, we can also go down the branches we want, thus “guide” GPT’s thinking.

Directions

The object level plan of creating cyborgs for alignment boils down to two main directions:

- Design more tools/methods like Loom which provide high-bandwidth, human-in-the-loop ways for humans to interact with GPT as a simulator (and not augment GPT in ways that change its natural simulator properties).

- Train alignment researchers to use these tools, develop a better intuitive understanding of how GPT behaves, leverage that understanding to exert fine-grained control over the model, and to do important cognitive work while staying grounded to the problem of solving alignment.